The goal of this blog entry is to demonstrate the use of pyeDSL to conveniently query BLM-related data from the Post Mortem (PM) and NXCALS databases. In particular, we show how to query the following for a given period of time around an interesting event in the LHC:

- LHC context information from PM

- BLM signals from PM for plotting and extraction of statistical features (min, max, etc.)

- BLM signals from NXCALS for extraction of statistical features (min, max, etc.)

The PM queries presented below are partially based on previous work of:

- M. Valette: https://gitlab.cern.ch/LHCData/PostMortemData

- M. Dziadosz, R. Schmidt, L. Grob: https://gitlab.cern.ch/UFO/PMdataAnalysis

We also found this presentation on BLM logging useful: https://indico.cern.ch/event/20366/contributions/394830/attachments/307939/429949/BLM_6th_Radiation_Workshop_Christos.pdf

0. Import Necessary Packages

- Time is a class for time manipulation and conversion

- Timer is a class for measuring time of code execution

- QueryBuilder is a pyeDSL class for constructing database queries

- FeatureBuilder is a pyeDSL class for calculating features of signals that have already been queried

- PmDbRequest is a low-level class for executing PM queries

- MappingMetadata is a class for retrieving BLM table names for NXCALS queries

- BlmAnalysis provides helper functions for BLM analysis

import pandas as pd

from lhcsmapi.Time import Time

from lhcsmapi.Timer import Timer

from lhcsmapi.pyedsl.QueryBuilder import QueryBuilder

from lhcsmapi.pyedsl.FeatureBuilder import FeatureBuilder

from lhcsmapi.dbsignal.post_mortem.PmDbRequest import PmDbRequest

from lhcsmapi.metadata.MappingMetadata import MappingMetadata

import lhcsmapi.analysis.BlmAnalysis as BlmAnalysis

0.1. LHCSMAPI version

import lhcsmapi

lhcsmapi.__version__

1. User Input

We choose a beam dump in the LHC as the start date. For such an event, PM stores a data record with information about the LHC context and BLM signals. To find the PM events, we search from one second before to two seconds after the selected beam dump.

The NXCALS database continuously logs BLM signals (with various running sums). Thus, NXCALS can be queried for any time period.

start_time_date = '2015-11-23 07:28:53+01:00'

t_start, t_end = Time.get_query_period_in_unix_time(start_time_date=start_time_date, duration_date=[(1, 's'), (2, 's')])
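To make the query window concrete, the sketch below computes it with the standard library only. It assumes that the timestamps are Unix times in nanoseconds (the PM request later in this entry passes t_start as fromTimestampInNanos); query_window_ns and to_unix_ns are hypothetical helpers and not part of lhcsmapi.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_unix_ns(moment):
    """Convert a timezone-aware datetime to integer Unix nanoseconds."""
    # Integer arithmetic on microseconds avoids float rounding errors.
    return (moment - EPOCH) // timedelta(microseconds=1) * 1000


def query_window_ns(event_date, before_s, after_s):
    """Return (start, end) of a window around event_date in Unix nanoseconds."""
    event = datetime.fromisoformat(event_date)
    start = to_unix_ns(event - timedelta(seconds=before_s))
    end = to_unix_ns(event + timedelta(seconds=after_s))
    return start, end


# One second before and two seconds after the selected beam dump.
window_start_ns, window_end_ns = query_window_ns('2015-11-23 07:28:53+01:00', 1, 2)
print(window_start_ns, window_end_ns)
```

Whether Time.get_query_period_in_unix_time returns exactly these values is an assumption; the sketch only illustrates the arithmetic behind the window.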

2. LHC Context

In this part we query LHC context information to support the BLM analysis. To this end, we use the context_query() method provided by pyeDSL.

- context_query() works only in the general-purpose query mode, with metadata provided through the with_query_parameters() method. In this mode, the user has to provide system, className, and source.

For the source field one can use a wildcard ('*'). However, such a query takes more time than one in which the BLM source is specified. The list of available BLM sources can be queried from PM, as shown further below.

with Timer():
    QueryBuilder().with_pm() \
        .with_duration(t_start=t_start, duration=[(2, 's')]) \
        .with_query_parameters(system='BLM', className='BLMLHC', source='*') \
        .context_query(contexts=["pmFillNum"]).df

with Timer():
    QueryBuilder().with_pm() \
        .with_duration(t_start=t_start, duration=[(2, 's')]) \
        .with_query_parameters(system='BLM', className='BLMLHC', source='HC.BLM.SR6.C') \
        .context_query(contexts=["pmFillNum"]).df
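Timer is used above as a context manager around each query. A minimal stand-in, assuming it does nothing more than measure wall-clock time, can be written with the standard library; elapsed_timer and the dummy workloads are illustrative and do not reproduce the lhcsmapi implementation.

```python
import time
from contextlib import contextmanager


@contextmanager
def elapsed_timer(label, results):
    """Store the wall-clock duration of the enclosed block in results[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the enclosed block raises an exception.
        results[label] = time.perf_counter() - start


timings = {}

# Dummy workloads standing in for the two queries being compared.
with elapsed_timer('wildcard source', timings):
    sum(range(200_000))

with elapsed_timer('single source', timings):
    sum(range(1_000))

for label, seconds in timings.items():
    print(f'{label}: {seconds:.6f} s')
```

Collecting the durations in a dictionary makes the wildcard and single-source timings easy to compare side by side.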

Therefore, for the remaining context queries we always provide the source.

QueryBuilder().with_pm() \
    .with_duration(t_start=t_start, duration=[(2, 's')]) \
    .with_query_parameters(system='LHC', className='CISX', source='CISX.CCR.LHC.A') \
    .context_query(contexts=["OVERALL_ENERGY", "OVERALL_INTENSITY_1", "OVERALL_INTENSITY_2"]).df

QueryBuilder().with_pm() \
    .with_duration(t_start=t_start, duration=[(2, 's')]) \
    .with_query_parameters(system='LHC', className='CISX', source='CISX.CCR.LHC.GA') \
    .context_query(contexts=["BEAM_MODE"]).df

QueryBuilder().with_pm() \
    .with_duration(t_start=t_start, duration=[(5, 's')]) \
    .with_query_parameters(system='LBDS', className='BSRA', source='LHC.BSRA.US45.B1') \
    .context_query(contexts=['aGXpocTotalIntensity', 'aGXpocTotalMaxIntensity']).df

QueryBuilder().with_pm() \
    .with_duration(t_start=t_start, duration=[(5, 's')]) \
    .with_query_parameters(system='LBDS', className='BSRA', source='LHC.BSRA.US45.B2') \
    .context_query(contexts=['aGXpocTotalIntensity', 'aGXpocTotalMaxIntensity']).df

We also developed a helper that retrieves the LHC context shown above with a single call.

lhc_context_df = BlmAnalysis.get_lhc_context(start_time_date)

lhc_context_df

source_timestamp_df = QueryBuilder().with_pm() \
    .with_duration(t_start=start_time_date, duration=[(1, 's'), (2, 's')]) \
    .with_query_parameters(system='BLM', className='BLMLHC', source='*') \
    .event_query().df

source_timestamp_df

3.1.1. List of Beam Loss Monitors

Then, we choose one BLM cluster (HC.BLM.SR6.C) and check the names of the BLMs it contains. For the sake of completeness, the timestamp (although it is the same) is also provided.

blm_names_df = QueryBuilder().with_pm() \
    .with_duration(t_start=t_start, duration=[(2, 's')]) \
    .with_query_parameters(system='BLM', className='BLMLHC', source='HC.BLM.SR6.C') \
    .context_query(contexts=["blmNames"]).df

blm_names_df

The list of available variables for a PM event is accessed through a low-level lhcsmapi call.

response_blm = PmDbRequest.get_response("pmdata", False, True, pm_rest_api_path="http://pm-api-pro/v2/",
                                        system='BLM', className='BLMLHC', source='HC.BLM.SR6.C',
                                        fromTimestampInNanos=t_start, durationInNanos=int(2e9))

for entry in response_blm['content'][0]['namesAndValues']:
    print(entry['name'])
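The loop above relies on the nested layout of the PM response. The sketch below mimics that layout with a hand-written dictionary (placeholder content, not real PM data; only the keys used in the loop are reproduced) and collects the variable names into a list instead of printing them. list_variable_names is a hypothetical helper.

```python
# Hand-written stand-in for a PM response. Only the keys used in this entry
# ('content', 'namesAndValues', 'name') are reproduced; no real data is included.
mock_response = {
    'content': [
        {
            'namesAndValues': [
                {'name': 'blmNames'},
                {'name': 'pmTurnLoss'},
                {'name': 'pmLogHistory1310ms'},
            ]
        }
    ]
}


def list_variable_names(response):
    """Return the variable names of the first event in the response."""
    events = response.get('content', [])
    if not events:
        # No event found in the queried period.
        return []
    return [entry['name'] for entry in events[0]['namesAndValues']]


variable_names = list_variable_names(mock_response)
print(variable_names)
```

Returning an empty list when no event is found avoids an IndexError if the queried period contains no PM event.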

We will query several variables with pyeDSL. Note that pyeDSL does not yet support passing a list of signal names for this type of query, so each signal is queried separately.

blm_log_history_df = QueryBuilder().with_pm() \
    .with_duration(t_start=t_start, duration=[(2, 's')]) \
    .with_query_parameters(system='BLM', className='BLMLHC', source='HC.BLM.SR6.C', signal='pmLogHistory1310ms') \
    .signal_query() \
    .overwrite_sampling_time(t_sampling=4e-05, t_end=1) \
    .dfs[0]

blm_thresholds_df = QueryBuilder().with_pm() \
    .with_duration(t_start=t_start, duration=[(2, 's')]) \
    .with_query_parameters(system='BLM', className='BLMLHC', source='HC.BLM.SR6.C', signal='pmLogHistory1310msThresholds') \
    .signal_query() \
    .overwrite_sampling_time(t_sampling=4e-05, t_end=1) \
    .dfs[0]

blm_turn_loss_df = QueryBuilder().with_pm() \
    .with_duration(t_start=t_start, duration=[(2, 's')]) \
    .with_query_parameters(system='BLM', className='BLMLHC', source='HC.BLM.SR6.C', signal='pmTurnLoss') \
    .signal_query() \
    .overwrite_sampling_time(t_sampling=4e-05, t_end=1) \
    .dfs[0]

- Plot a single BLM

df = blm_turn_loss_df[blm_names_df.at[0, 'blmNames']]

df = df[df.index > 1]

df.plot(figsize=(15,7))

3.2. NXCALS

In the next step we query NXCALS for BLM running sum 1.

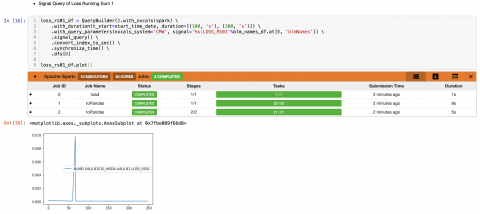

- Signal Query of Loss Running Sum 1

loss_rs01_df = QueryBuilder().with_nxcals(spark) \
    .with_duration(t_start=start_time_date, duration=[(100, 's'), (200, 's')]) \
    .with_query_parameters(nxcals_system='CMW', signal='%s:LOSS_RS01'%blm_names_df.at[0, 'blmNames']) \
    .signal_query() \
    .convert_index_to_sec() \
    .synchronize_time() \
    .dfs[0]

loss_rs01_df.plot()
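The running sums mentioned in this entry integrate the loss signal over time windows of different lengths. As a rough illustration only (the BLM electronics are not modelled here, and the sample values and window lengths are made up), a sliding-window sum over equally spaced samples can be sketched as:

```python
from collections import deque


def running_sum(samples, window):
    """Return the sum of every `window` consecutive samples, sliding by one sample."""
    if window <= 0:
        raise ValueError('window must be positive')
    sums = []
    current = deque()
    total = 0.0
    for value in samples:
        current.append(value)
        total += value
        if len(current) > window:
            # Drop the oldest sample once the window is full.
            total -= current.popleft()
        if len(current) == window:
            sums.append(total)
    return sums


# Made-up loss samples with a single spike.
losses = [0.0, 1.0, 4.0, 1.0, 0.0, 0.0]
short_window = running_sum(losses, 1)
long_window = running_sum(losses, 3)
print(short_window)
print(long_window)
```

A short window follows the spike sample by sample, while a longer window spreads it over several outputs, which is one reason to look at more than one running sum.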

4. Feature Query All BLMs for a Single Crate

Due to the large number of BLMs, querying and plotting all of them is impractical in this environment; there are dedicated applications for this purpose. In the following, we demonstrate feature engineering (mean, std, min, and max values) for the BLM signals stored in PM and NXCALS.

features = ['mean', 'std', 'max', 'min']

4.1. Post Mortem

PM does not support calculating features on the database side (which would be beneficial in terms of communication and computation time). Therefore, we need to query the raw signals and perform the feature engineering afterwards.

- pmLogHistory1310msThresholds

blm_thresholds_features_row_df = FeatureBuilder().with_multicolumn_signal(blm_thresholds_df) \
    .calculate_features(features=features, prefix='threshold') \
    .convert_into_row(index=lhc_context_df.index) \
    .dfs

- pmTurnLoss

blm_turn_loss_features_row_df = FeatureBuilder().with_multicolumn_signal(blm_turn_loss_df) \
    .calculate_features(features=features, prefix='turn_loss') \
    .convert_into_row(index=lhc_context_df.index) \
    .dfs
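The local feature step for PM signals can be illustrated without lhcsmapi. The sketch below computes the four features per signal with the standard library and flattens them into a single row. The signal names, the sample values, and the prefix_signal_feature column naming are assumptions made for illustration; FeatureBuilder may name its columns differently.

```python
import statistics


def calculate_features(signals, prefix):
    """Flatten mean, std, max and min of each signal into one row (a dict)."""
    row = {}
    for name, samples in signals.items():
        row[f'{prefix}_{name}_mean'] = statistics.mean(samples)
        # Sample standard deviation (n - 1 in the denominator).
        row[f'{prefix}_{name}_std'] = statistics.stdev(samples)
        row[f'{prefix}_{name}_max'] = max(samples)
        row[f'{prefix}_{name}_min'] = min(samples)
    return row


# Made-up signals standing in for the columns of a queried PM data frame.
signals = {'BLM_A': [1.0, 2.0, 3.0], 'BLM_B': [4.0, 4.0, 4.0]}
feature_row = calculate_features(signals, 'turn_loss')
print(feature_row)
```

Each signal contributes one column per feature, so a crate with many BLMs yields a wide but single-row table.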

4.2. NXCALS

The NXCALS ecosystem brings cluster computing capabilities to the logging databases. It allows developing analysis code with the Spark API. pyeDSL encapsulates the Spark API and provides a coherent feature engineering query. In other words, features are calculated on the cluster where the data is stored, unlike for PM, where the calculation is performed locally. The table of BLM signal names was prepared by Christoph Wiesener.

- Get signal names from MappingMetadata

blm_nxcals_df = MappingMetadata.get_blm_table()

blm_nxcals_df['LOSS_RS01'] = blm_nxcals_df['Variable Name'].apply(lambda x: '%s:LOSS_RS01' % x)

blm_nxcals_df['LOSS_RS09'] = blm_nxcals_df['Variable Name'].apply(lambda x: '%s:LOSS_RS09' % x)

blm_nxcals_df.head()
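The two apply calls above follow the same pattern: a running-sum suffix is appended to each BLM variable name. A small sketch of that pattern for an arbitrary set of running sums is given below; the BLM names are placeholders and nxcals_signal_names is a hypothetical helper, not part of lhcsmapi.

```python
def nxcals_signal_names(variable_names, running_sums):
    """Map each running-sum label to its '<variable>:LOSS_<label>' signal names."""
    return {
        label: [f'{name}:LOSS_{label}' for name in variable_names]
        for label in running_sums
    }


# Placeholder names standing in for the 'Variable Name' column of the BLM table.
placeholder_blms = ['BLM_PLACEHOLDER_1', 'BLM_PLACEHOLDER_2']
signals_by_sum = nxcals_signal_names(placeholder_blms, ['RS01', 'RS09'])
print(signals_by_sum['RS01'])
```

Each list in the resulting dictionary has the same shape as the signal lists built from the BLM table columns.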

- Run a feature query with pyeDSL: Running Sum 1

loss_rs01_features_row_df = QueryBuilder().with_nxcals(spark) \
    .with_duration(t_start=start_time_date, duration=[(100, 's'), (200, 's')]) \
    .with_query_parameters(nxcals_system='CMW', signal=list(blm_nxcals_df['LOSS_RS01'])) \
    .feature_query(features=features) \
    .convert_into_row(lhc_context_df.index) \
    .df

loss_rs01_features_row_df

- Run a feature query with pyeDSL: Running Sum 9

loss_rs09_features_row_df = QueryBuilder().with_nxcals(spark) \
    .with_duration(t_start=start_time_date, duration=[(100, 's'), (200, 's')]) \
    .with_query_parameters(nxcals_system='CMW', signal=list(blm_nxcals_df['LOSS_RS09'])) \
    .feature_query(features=features) \
    .convert_into_row(lhc_context_df.index) \
    .df

loss_rs09_features_row_df

5. Final Row

Finally, we combine all rows into a single one that can be saved to persistent storage. The code of this notebook can be extracted into a job that collects historical data representing BLM signals during the operation of the LHC.

pd.concat([lhc_context_df, blm_thresholds_features_row_df, blm_turn_loss_features_row_df, loss_rs01_features_row_df, loss_rs09_features_row_df], axis=1)
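When partial rows are combined column-wise, two of them should not define the same column, otherwise the final row contains ambiguous names. A minimal sketch of such a check with plain dictionaries is shown below; merge_rows is a hypothetical helper and the column names and values are made up for illustration.

```python
def merge_rows(*rows):
    """Merge partial rows (dicts) into one, refusing duplicate column names."""
    merged = {}
    for row in rows:
        duplicates = merged.keys() & row.keys()
        if duplicates:
            raise ValueError(f'duplicate columns: {sorted(duplicates)}')
        merged.update(row)
    return merged


# Made-up partial rows standing in for the context, PM and NXCALS results.
context_row = {'fill_number': 1, 'beam_mode': 'EXAMPLE'}
pm_row = {'turn_loss_BLM_A_max': 3.0}
nxcals_row = {'rs01_BLM_A_max': 2.5}

final_row = merge_rows(context_row, pm_row, nxcals_row)
print(final_row)
```

Using distinct prefixes for each data source, as done with prefix='threshold' and prefix='turn_loss' above, is one way to keep column names unique.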
