In this post, we discuss a proposal for integrating the Hardware Commissioning (HWC) notebooks with the AccTesting infrastructure. The HWC notebooks provide a step-by-step process of querying, analyzing, and asserting the relevant signals stored in the PM and NXCALS databases. The most up-to-date list of notebooks is available at https://cern.ch/sigmon. The AccTesting framework orchestrates the execution of an HWC test on the physical hardware as well as the analysis of the test results stored in the respective databases. In this context, we consider the automatic triggering of an HWC notebook and the signature of its analysis. The adopted rationale is to rely on IT-supported services and to create our own software solutions only if absolutely necessary. The proof-of-concept application should serve as a starting point for a discussion and definition of functional requirements with the involved parties. In summary, the guiding principles are:

- the same notebook is used for both a manual (interactive) and an automatic (on-demand) analysis of a HWC test

- the analysis notebooks are prepared by the Signal Monitoring team in close contact with MP3 members and hardware experts

- the analysis notebooks are executed on the infrastructure provided by IT; the performance for both execution modes should be comparable

- the integration with AccTesting requires support from MPE/MS; in particular, two steps are required: (i) triggering of our analysis pipeline and (ii) test signature

Manual and Automatic Execution of Notebooks

As far as possible, the analyses of HWC tests should be executed automatically. In case of a failed test, a manual analysis by hardware experts is required. To this end, the same notebook can be executed in either automatic or manual mode.

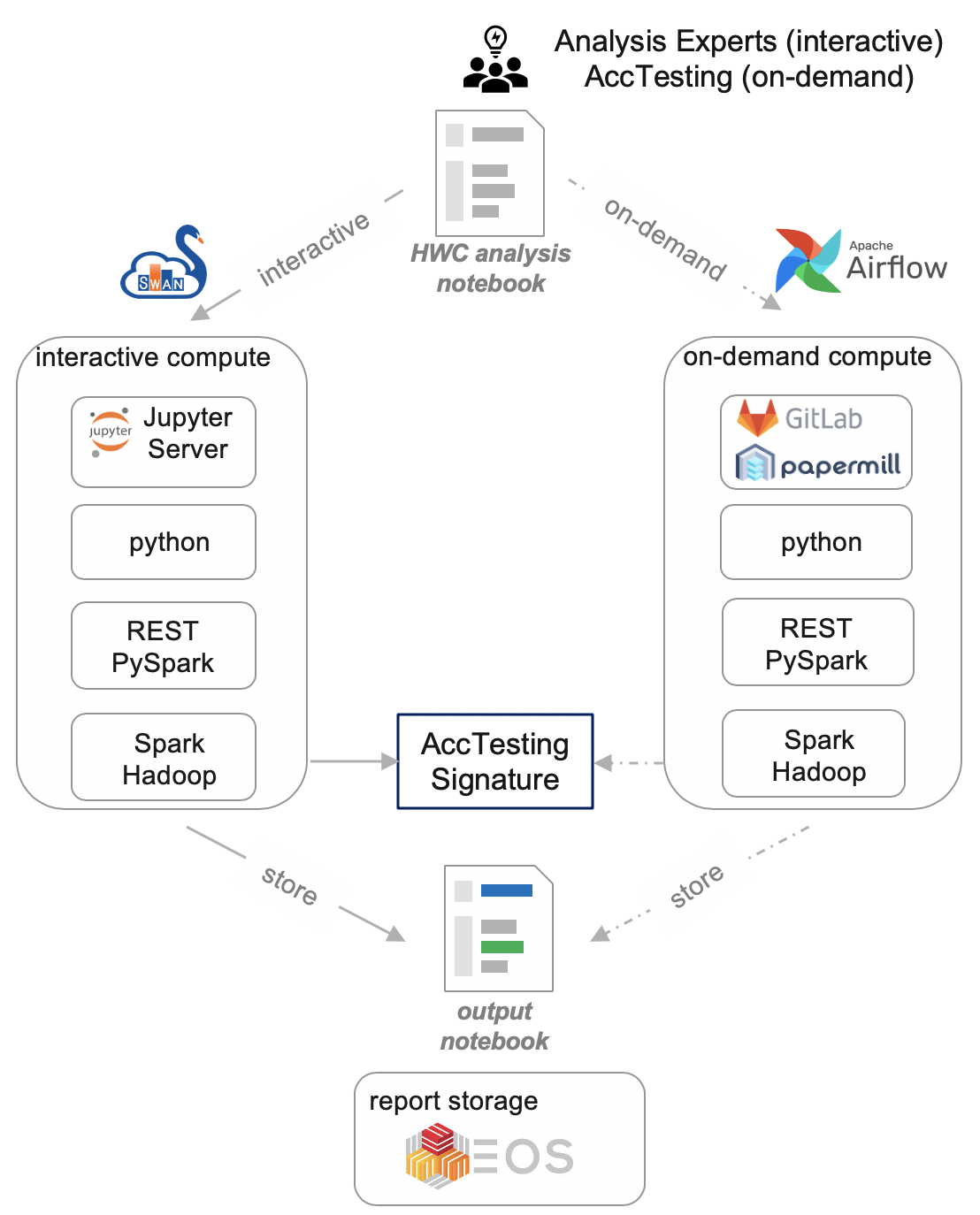

The rectangles with round and regular corners denote modules supported by the IT-DB and TE-MPE groups, respectively. The continuous lines represent manual (interactive) notebook execution, while the dashed lines represent automatic (on-demand) notebook execution.

Automatic Execution Workflow

In the following, we discuss the protocol and parameters needed to execute an HWC notebook. We chose a top-down approach, i.e., from the AccTesting trigger to the test signature, report storage, and notification. The workflow is illustrated with the analysis of a PIC2 test for the LHC main dipole circuit (RB): https://gitlab.cern.ch/LHCData/lhc-sm-hwc/-/blob/master/rb/AN_RB_PIC2.ipynb.

Triggering Test Analysis

The first step is the triggering of an HWC analysis notebook by AccTesting. To this end, the Airflow REST API is called with the parameters of the HWC notebook. The documentation is available at https://airflow.apache.org/docs/stable/rest-api-ref.html.

The table below summarizes a minimal set of parameters required to trigger an HWC notebook.

| Parameter | Variable | Description |
|---|---|---|
| test type | test_type | Type of HWC test, e.g., PIC2 |
| circuit name | circuit_name | Name of the circuit under test, e.g., RB.A12 |
| start time | start_time | Start time of the test, e.g., 2015-01-13 16:59:11 |
| end time | end_time | End time of the test, e.g., 2015-01-13 17:15:46 |

Examples of triggering with bash (curl) and Python (requests) are shown below.

```bash
curl -X POST https://lhc-sm-scheduler.web.cern.ch/api/experimental/dags/rest_trigger_hwc_dag/dag_runs \
  -H 'Cache-Control: no-cache' \
  -H 'Content-Type: application/json' \
  -d '{"conf":"{\"test_type\":\"PIC2\", \"circuit_name\":\"RB.A12\", \"start_time\":\"2015-01-13 16:59:11\", \"end_time\":\"2015-01-13 17:15:46\"}"}'
```

```python
import requests

url = 'https://lhc-sm-scheduler.web.cern.ch/api/experimental/dags/rest_trigger_hwc_dag/dag_runs'
conf = {'test_type': 'PIC2', 'circuit_name': 'RB.A12',
        'start_time': '2015-01-13 16:59:11', 'end_time': '2015-01-13 17:15:46'}

# The json argument serializes the body and sets the Content-Type: application/json header
result = requests.post(url, json={'conf': conf})
print(result.content.decode('utf-8'))
```
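AccTesting could check the parameters from the table before calling the API, so that a malformed request fails immediately instead of inside the notebook. The sketch below works under that assumption. The helper names build_trigger_payload and post_dag_run are hypothetical, and the timestamp format is inferred from the examples above. It imports requests lazily, so the validation runs even without that package.

```python
import json
from datetime import datetime

# Timestamp format inferred from the examples in this post (an assumption)
TIME_FORMAT = '%Y-%m-%d %H:%M:%S'
REQUIRED_KEYS = ('test_type', 'circuit_name', 'start_time', 'end_time')
AIRFLOW_URL = 'https://lhc-sm-scheduler.web.cern.ch/api/experimental/dags/rest_trigger_hwc_dag/dag_runs'


def build_trigger_payload(conf):
    """Validate the HWC parameters and wrap them in the {'conf': ...} body sent to Airflow."""
    missing = [key for key in REQUIRED_KEYS if key not in conf]
    if missing:
        raise ValueError('missing parameters: {}'.format(', '.join(missing)))
    # strptime raises ValueError for malformed timestamps
    start = datetime.strptime(conf['start_time'], TIME_FORMAT)
    end = datetime.strptime(conf['end_time'], TIME_FORMAT)
    if end <= start:
        raise ValueError('end_time must be later than start_time')
    # Only the required keys are forwarded
    return {'conf': {key: conf[key] for key in REQUIRED_KEYS}}


def post_dag_run(conf, url=AIRFLOW_URL):
    """POST the validated parameters to Airflow and return the response body as text."""
    import requests  # imported lazily; only needed for the HTTP call
    response = requests.post(url, json=build_trigger_payload(conf))
    response.raise_for_status()
    return response.text


if __name__ == '__main__':
    payload = build_trigger_payload({'test_type': 'PIC2', 'circuit_name': 'RB.A12',
                                     'start_time': '2015-01-13 16:59:11',
                                     'end_time': '2015-01-13 17:15:46'})
    print(json.dumps(payload))
```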

Triggering of a Parameterized Notebook

A detailed description of how to trigger a notebook with GitLab Runner will be provided in due time. The GitLab REST API documentation is available at https://docs.gitlab.com/ee/api/.

The table below summarizes the minimal set of CI variables required to trigger the GitLab pipeline that executes an HWC notebook.

| Parameter | Variable | Description |
|---|---|---|
| cluster | CLUSTER | Cluster used for the notebook execution, e.g., hadoop-nxcals |
| notebook name | NOTEBOOK_NAME | Path of the analysis notebook in the repository, without the .ipynb extension, e.g., rb/AN_RB_PIC2 |
| circuit name | CIRCUIT_NAME | Name of the circuit under test, e.g., RB.A12 |
| start time | START_TIME | Start time of the test, e.g., 2015-01-13 16:59:11 |
| end time | END_TIME | End time of the test, e.g., 2015-01-13 17:15:46 |

Examples of triggering with bash (curl) and Python (subprocess calling curl) are shown below.

```bash
export TOKEN=0a53a152068d7fd59cb67c7b010807
curl -X POST -F token=$TOKEN \
  -F "variables[CLUSTER]=hadoop-nxcals" \
  -F "variables[CIRCUIT_NAME]=RB.A12" \
  -F "variables[NOTEBOOK_NAME]=rb/AN_RB_PIC2" \
  -F "variables[START_TIME]=2015-01-13 16:59:11" \
  -F "variables[END_TIME]=2015-01-13 17:15:46" \
  -F ref=master \
  https://gitlab.cern.ch/api/v4/projects/90588/trigger/pipeline
```

```python
import subprocess

command = ['curl', '-X', 'POST', '-F', 'token=0a53a152068d7fd59cb67c7b010807',
           '-F', 'variables[CLUSTER]=hadoop-nxcals',
           '-F', 'variables[CIRCUIT_NAME]=RB.A12',
           '-F', 'variables[NOTEBOOK_NAME]=rb/AN_RB_PIC2',
           '-F', 'variables[START_TIME]=2015-01-13 16:59:11',
           '-F', 'variables[END_TIME]=2015-01-13 17:15:46',
           '-F', 'ref=master',
           'https://gitlab.cern.ch/api/v4/projects/90588/trigger/pipeline']

# Each list element is passed to curl as a single argument, so values containing spaces need no quoting
result = subprocess.run(command, stdout=subprocess.PIPE).stdout.decode('utf-8')
print(result)
```
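Calling curl through subprocess ignores HTTP errors unless the output is inspected. One possible alternative sends the same form fields directly with requests. The helper names build_pipeline_form and trigger_pipeline are hypothetical. The sketch assumes the trigger token is available to the caller, e.g., in the TOKEN environment variable as in the bash example.

```python
GITLAB_TRIGGER_URL = 'https://gitlab.cern.ch/api/v4/projects/90588/trigger/pipeline'


def build_pipeline_form(token, notebook_name, circuit_name, start_time, end_time,
                        cluster='hadoop-nxcals', ref='master'):
    """Return the form fields for the GitLab pipeline trigger; CI variables use the variables[NAME] keys."""
    variables = {
        'CLUSTER': cluster,
        'NOTEBOOK_NAME': notebook_name,
        'CIRCUIT_NAME': circuit_name,
        'START_TIME': start_time,
        'END_TIME': end_time,
    }
    form = {'token': token, 'ref': ref}
    form.update({'variables[{}]'.format(name): value for name, value in variables.items()})
    return form


def trigger_pipeline(form, url=GITLAB_TRIGGER_URL):
    """Send the form to GitLab and return the response body (the created pipeline as JSON text)."""
    import requests  # imported lazily; only needed for the HTTP call
    # A dict passed as data is sent form-encoded
    response = requests.post(url, data=form)
    response.raise_for_status()  # raise on 4xx/5xx instead of failing silently
    return response.text
```

For instance, trigger_pipeline(build_pipeline_form(os.environ['TOKEN'], 'rb/AN_RB_PIC2', 'RB.A12', '2015-01-13 16:59:11', '2015-01-13 17:15:46')) would send the same request as the curl example.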

After receiving the execution request from AccTesting through the Airflow REST API, Airflow selects the appropriate notebook and triggers its execution. On the Airflow side, this is done by the following DAG:

```python
import subprocess

from airflow.models import DAG
from airflow.operators.python_operator import PythonOperator
from airflow.utils.dates import days_ago

# Based on https://medium.com/@ntruong/airflow-externally-trigger-a-dag-when-a-condition-match-26cae67ecb1a

GITLAB_TRIGGER_URL = 'https://gitlab.cern.ch/api/v4/projects/90588/trigger/pipeline'

# schedule_interval=None: the DAG runs only when triggered externally, e.g., through the REST API
dag = DAG(
    dag_id='rest_trigger_hwc_dag',
    default_args={'start_date': days_ago(2), 'owner': 'AccTesting'},
    schedule_interval=None,
)


def trigger_notebook_execution(*args, **kwargs):
    # Parameters sent by AccTesting in the "conf" field of the REST request
    conf = kwargs['dag_run'].conf
    print("Received a REST message: {}".format(conf))

    # Select the notebook from the circuit and test type, e.g., RB.A12 and PIC2 -> rb/AN_RB_PIC2
    circuit_type = conf['circuit_name'].split('.')[0]
    notebook_name = '{}/AN_{}_{}'.format(circuit_type.lower(), circuit_type, conf['test_type'])

    command = ['curl', '-X', 'POST', '-F', 'token=0a53a152068d7fd59cb67c7b010807',
               '-F', 'variables[CLUSTER]=hadoop-nxcals',
               '-F', 'variables[CIRCUIT_NAME]=' + conf['circuit_name'],
               '-F', 'variables[NOTEBOOK_NAME]=' + notebook_name,
               '-F', 'variables[START_TIME]=' + conf['start_time'],
               '-F', 'variables[END_TIME]=' + conf['end_time'],
               '-F', 'ref=master',
               GITLAB_TRIGGER_URL]
    print(' '.join(command))
    # The returned GitLab response is pushed to XCom by the PythonOperator
    return subprocess.run(command, stdout=subprocess.PIPE).stdout.decode('utf-8')


run_this = PythonOperator(
    task_id='trigger_notebook_execution',
    python_callable=trigger_notebook_execution,
    provide_context=True,
    dag=dag,
)
```
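The notebook selection inside trigger_notebook_execution is pure string manipulation, so it could be factored out and unit-tested without an Airflow installation. The function names below are hypothetical. The sketch makes the same assumptions as the DAG: the circuit type is the part of the circuit name before the first dot, and each circuit type has a lowercase folder of the same name in the repository.

```python
def notebook_name_for(circuit_name, test_type):
    """Map a circuit name and test type to a notebook path, e.g. ('RB.A12', 'PIC2') -> 'rb/AN_RB_PIC2'."""
    # The circuit type is the prefix before the first dot, e.g. RB in RB.A12
    circuit_type = circuit_name.split('.')[0]
    return '{}/AN_{}_{}'.format(circuit_type.lower(), circuit_type, test_type)


def gitlab_variables_from_conf(conf):
    """Translate the Airflow dag_run.conf dictionary into the GitLab CI variables of the pipeline."""
    return {
        'NOTEBOOK_NAME': notebook_name_for(conf['circuit_name'], conf['test_type']),
        'CIRCUIT_NAME': conf['circuit_name'],
        'START_TIME': conf['start_time'],
        'END_TIME': conf['end_time'],
    }
```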

Execution of a Parameterized Notebook

The Airflow DAG triggers a GitLab pipeline that executes the notebook on the Spark cluster. The script execute_notebook.py (https://gitlab.cern.ch/LHCData/lhc-sm-hwc/-/blob/master/execute_notebook.py) takes four command-line arguments and is invoked in the GitLab pipeline defined in .gitlab-ci.yml (https://gitlab.cern.ch/LHCData/lhc-sm-hwc/-/blob/master/.gitlab-ci.yml) as follows:

```yaml
# Execute the notebook, convert to pdf and copy the pdf to EOS folder
- echo $CLUSTER
- echo $NOTEBOOK_NAME
- echo $CIRCUIT_NAME
- echo $START_TIME
- echo $END_TIME
# START_TIME and END_TIME contain a space and must be quoted to arrive as single arguments
- python3 execute_notebook.py "$NOTEBOOK_NAME" "$CIRCUIT_NAME" "$START_TIME" "$END_TIME"
```
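START_TIME and END_TIME contain a space, so the shell splits each of them into two words unless they are quoted. In that case execute_notebook.py receives the date of the start time as its start time and the clock time of the start time as its end time. The effect can be reproduced with Python's shlex module, which approximates shell word splitting:

```python
import shlex

START_TIME = '2015-01-13 16:59:11'
END_TIME = '2015-01-13 17:15:46'

# Command line as seen after unquoted variable expansion: each timestamp becomes two words
unquoted = 'python3 execute_notebook.py rb/AN_RB_PIC2 RB.A12 {} {}'.format(START_TIME, END_TIME)
# Command line with quoted values: each timestamp stays a single argument
quoted = 'python3 execute_notebook.py rb/AN_RB_PIC2 RB.A12 {} {}'.format(
    shlex.quote(START_TIME), shlex.quote(END_TIME))

# Drop 'python3' and the script name to show what ends up in sys.argv[1:]
print(shlex.split(unquoted)[2:])
print(shlex.split(quoted)[2:])
```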

Inside the execute_notebook.py script, papermill executes the notebook with the given parameters:

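```python
import sys
import time

import papermill as pm

# Command-line arguments: notebook path without .ipynb, circuit name, start time, end time
notebook_name, circuit_name, start_time, end_time = sys.argv[1:5]

# A timestamp prefix makes the output file names unique per execution
ts = str(int(time.time()))
notebook = notebook_name + '.ipynb'
base_name = notebook_name.split('/')[-1]
output_ipynb = ts + '-' + base_name + '.ipynb'
output_pdf = ts + '-' + base_name + '.pdf'  # target of the later PDF conversion

# Execute the notebook; the parameters override the defaults of its cell tagged "parameters"
pm.execute_notebook(
    notebook,
    output_ipynb,
    parameters=dict(notebook_execution_mode='automatic', circuit_name=circuit_name,
                    start_time=start_time, end_time=end_time),
)
```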
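Indexing sys.argv directly gives no error when an argument is missing or a timestamp was split by the shell. The sketch below is an assumed refactoring, not the current script. It parses the arguments with argparse, which rejects a wrong number of arguments with a usage message. The helper names parse_args and output_names are hypothetical.

```python
import argparse
import time


def parse_args(argv=None):
    """Parse the four positional arguments passed by the GitLab pipeline."""
    parser = argparse.ArgumentParser(description='Execute an HWC analysis notebook with papermill.')
    parser.add_argument('notebook_name', help='notebook path without extension, e.g. rb/AN_RB_PIC2')
    parser.add_argument('circuit_name', help='circuit under test, e.g. RB.A12')
    parser.add_argument('start_time', help="test start, e.g. '2015-01-13 16:59:11'")
    parser.add_argument('end_time', help="test end, e.g. '2015-01-13 17:15:46'")
    # Extra arguments (e.g. a split timestamp) make parse_args exit with an error
    return parser.parse_args(argv)


def output_names(notebook_name, ts=None):
    """Build timestamp-prefixed .ipynb and .pdf output names from the notebook base name."""
    ts = str(int(time.time())) if ts is None else str(ts)
    base_name = notebook_name.split('/')[-1]
    return ts + '-' + base_name + '.ipynb', ts + '-' + base_name + '.pdf'
```

The papermill call would then use args.notebook_name + '.ipynb', args.circuit_name, args.start_time and args.end_time.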

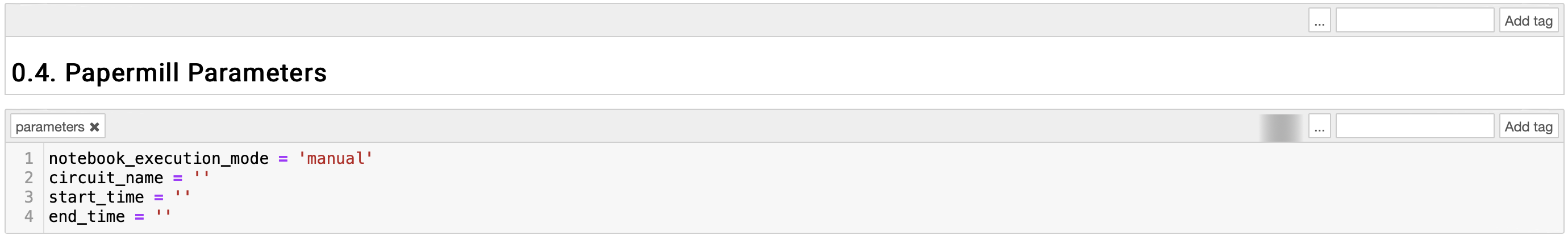

Finally, the executed notebook must contain a dedicated cell tagged "parameters". Papermill injects the passed values in a new cell placed right after it, so they override the defaults defined there.
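A minimal sketch of such a parameters cell follows. execute_notebook.py confirms only the value 'automatic'. The default 'interactive' for manual runs, and the flag derived from it, are assumptions for illustration.

```python
# Cell tagged "parameters": default values used in manual (interactive) mode.
# In automatic mode, papermill inserts a cell after this one that overrides them.
notebook_execution_mode = 'interactive'  # hypothetical default; the pipeline passes 'automatic'
circuit_name = 'RB.A12'
start_time = '2015-01-13 16:59:11'
end_time = '2015-01-13 17:15:46'

# A later cell can branch on the mode, e.g. to skip interactive widgets in automatic runs
is_automatic = notebook_execution_mode == 'automatic'
print('Analyzing {} from {} to {} (automatic: {})'.format(circuit_name, start_time, end_time, is_automatic))
```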

For more details on papermill, please consult the documentation: https://papermill.readthedocs.io/en/latest/

At the end of the notebook execution, the analysis result is available. It should be communicated back to AccTesting via a signature, and to the MP3 members and hardware experts as a report and an e-mail notification.

AccTesting Signature

- to be done in cooperation with MPE/MS

Report Storage

The executed notebook is converted into a PDF file and stored on EOS.
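One way to implement this step uses nbconvert. The sketch assumes a LaTeX installation on the runner and EOS mounted as a regular file system. The EOS folder, the per-circuit layout, and the helper names are hypothetical, since the actual location is not given here.

```python
import os
import shutil

# Hypothetical EOS destination; the actual report folder is not specified in this post
EOS_REPORT_DIR = '/eos/path/to/hwc/reports'


def report_destination(output_pdf, circuit_name, eos_dir=EOS_REPORT_DIR):
    """Place reports in one folder per circuit (a hypothetical layout)."""
    return os.path.join(eos_dir, circuit_name, os.path.basename(output_pdf))


def convert_and_store(output_ipynb, output_pdf, circuit_name, eos_dir=EOS_REPORT_DIR):
    """Convert the executed notebook to PDF with nbconvert and copy the PDF to EOS."""
    from nbconvert import PDFExporter  # imported lazily; requires nbconvert and LaTeX

    # PDFExporter returns the PDF as bytes together with a resources dictionary
    pdf_bytes, _resources = PDFExporter().from_filename(output_ipynb)
    with open(output_pdf, 'wb') as f:
        f.write(pdf_bytes)

    destination = report_destination(output_pdf, circuit_name, eos_dir)
    os.makedirs(os.path.dirname(destination), exist_ok=True)
    shutil.copy(output_pdf, destination)
    return destination
```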

Expert Notification

- to be done in Airflow
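This step is still open, so the following is only a sketch of how a notification could be composed with Python's standard email package. The sender address, recipients, status values, SMTP host, and helper names are hypothetical. Inside Airflow, the sending part could instead be delegated to its EmailOperator, which uses the SMTP settings of the Airflow configuration.

```python
import smtplib
from email.message import EmailMessage

# Hypothetical sender; the actual mailing lists are not defined in this post
SENDER = 'hwc-analysis@example.org'


def build_notification(recipients, circuit_name, test_type, status, report_path):
    """Compose a plain-text e-mail announcing the result of an automatic HWC analysis."""
    msg = EmailMessage()
    msg['From'] = SENDER
    msg['To'] = ', '.join(recipients)
    msg['Subject'] = '[HWC] {} {}: {}'.format(circuit_name, test_type, status)
    msg.set_content(
        'Automatic analysis of the {} test for {}: {}\n'
        'Report: {}\n'.format(test_type, circuit_name, status, report_path)
    )
    return msg


def send_notification(msg, host='localhost'):
    """Send the message through an SMTP relay (the host is an assumption)."""
    with smtplib.SMTP(host) as server:
        server.send_message(msg)
```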
